When the Times Higher Education (THE) world university rankings were published yesterday, it was no surprise to see many of the usual suspects in its top 10, including the California Institute of Technology, Harvard, Oxford and Cambridge. The QS rankings published two weeks ago put MIT in top place, closely followed by Cambridge, Imperial, Harvard and Oxford.

Both these lists (and the Shanghai Academic Ranking of World Universities) are dominated by UK and US universities. But there’s an interloper: Switzerland, with a population of only eight million, has two universities, the Swiss Federal Institute of Technology (ETH Zurich) and the École Polytechnique Fédérale de Lausanne, in the top 20 in the QS rankings and seven in the top 200 in both the QS and THE rankings.

It performs better than many bigger countries with successful economies. So what is the secret of the country’s success?

To answer that requires an understanding of how rankings work. Each of the three best-known ranking systems uses a set of indicators, each allocated a weighting. In the QS rankings, the biggest weighting is given to academic reputation, based on a survey of academics (40%), followed by faculty/student ratio (20%), citations per faculty (20%), employer reputation, based on a survey of employers (10%), international student ratio (5%) and international staff ratio (5%).
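To make the arithmetic concrete, here is a minimal sketch of how such weightings could combine into a single score. The weights are the QS figures quoted above; everything else is an assumption for illustration: the function name `composite_score`, the 0-100 scale for each indicator, and the scores for the invented university. How QS actually normalises its raw data before weighting is not covered here.

```python
# QS weights as quoted in the article, converted from percentages to fractions.
QS_WEIGHTS = {
    "academic_reputation": 0.40,
    "faculty_student_ratio": 0.20,
    "citations_per_faculty": 0.20,
    "employer_reputation": 0.10,
    "international_student_ratio": 0.05,
    "international_staff_ratio": 0.05,
}


def composite_score(indicator_scores, weights=QS_WEIGHTS):
    """Return the weighted sum of indicator scores.

    Assumption: every indicator has already been scaled to 0-100.
    """
    missing = set(weights) - set(indicator_scores)
    if missing:
        raise ValueError(f"missing indicators: {sorted(missing)}")
    return sum(weights[name] * indicator_scores[name] for name in weights)


# Hypothetical scores for an invented university (not real data).
example = {
    "academic_reputation": 80,
    "faculty_student_ratio": 90,
    "citations_per_faculty": 95,
    "employer_reputation": 70,
    "international_student_ratio": 100,
    "international_staff_ratio": 100,
}

print(round(composite_score(example), 1))
```

In this sketch, full marks on both international indicators add only 10 points in total, while every 10 points of academic reputation are worth 4 on their own, which shows how heavily the survey-based measure dominates the result.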

Switzerland’s success partly reflects the country’s heavy investment in research: it spends 2.2% of its GDP on research and development, double the EU average of 1.1%.

Ben Sowter, head of the QS intelligence unit, says that it has been given an added boost by the presence of the Large Hadron Collider in Geneva, giving the country’s institutions the opportunity to benefit from collaborations with leading universities worldwide. This, he says, has “dramatically magnified their research impact and influence at a global scale”.

The strong research performance of the top Swiss institutions enables them to attract the best international staff, helping them do well on that particular indicator. But the country’s location in the centre of western Europe also makes Switzerland attractive to international staff and students: it has borders with five countries, and three principal national languages (French, German and Italian). As a result, 21% of university students come from abroad.

Ellen Hazelkorn, director of research and enterprise at the Dublin Institute of Technology, notes that Switzerland’s size gives it an advantage on the international staff indicator: “If you’re a small country, you probably have more people who got their doctorate in another country.” Some international staff can even commute to work in a Swiss university from across one of the borders.

Like a number of other institutions in the QS top 20, both ETH Zurich and the École Polytechnique Fédérale de Lausanne specialise in technology and science. Scientists all over the world tend to publish in English, and because QS’s citations indicator is based on Elsevier’s Scopus database, which has a bias towards English-language publications, this gives an advantage to institutions focused on science – academics in the humanities often prefer to publish in their own language.

Scientists also tend to publish in journals, while humanities academics often publish their work in book chapters or monographs, which are not included in Scopus – again giving an advantage to science-focused institutions.

Frank Ziegele, director of Germany’s Centre for Higher Education, says: “If you’re a university focused on humanities you never have the chance to be on top in the QS rankings.”

Some academics are highly sceptical about the usefulness of the indicators used to compile rankings: Hazelkorn argues that a high proportion of international students could just as easily decrease a university’s quality as increase it.

The usefulness of faculty-student ratio as a proxy for teaching and learning is also contentious: Hazelkorn describes it as “completely meaningless and a hugely disputed measure of anything having to do with quality,” though Sowter argues that “faculty-student ratio is necessary, even in an increasingly digitised world, if you’re going to be able to provide enough academic support for your students.”

The bigger difficulty with the rankings is that of the “halo” effect where, in the words of Simon Marginson, professor of international higher education at the Institute of Education, “existing reputation drives judgement”.

He says: “New institutions can’t crack the top group because reputations continue to recycle, and it protects their material position, because it keeps the research money flying in. Students and staff of quality keep wanting to go there and that sustains the quality.”

Is there another way? Marginson believes that the Leiden rankings, which use single (rather than aggregated) indicators, and draw on hard data rather than surveys, provide a more accurate methodology for assessing universities’ research performance.

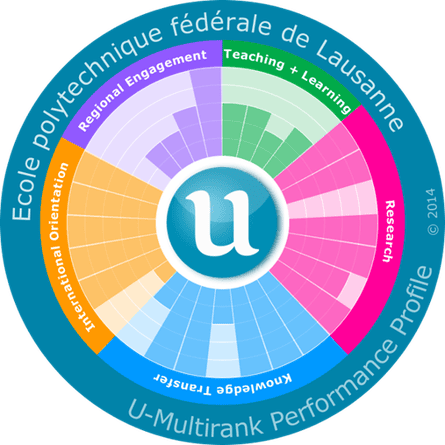

The new U-Multirank site, developed by Ziegele and his colleagues, does away with rankings, instead showing profiles for individual universities, with a clear measure for each indicator: users can compare how two universities perform on different indicators. While ETH Zurich, for example, retains an impressive research score, only 61% of new entrants successfully complete their bachelor’s degree. Although U-Multirank is potentially a rich source of data, many of the top universities have so far refused to provide data, compromising its usefulness.

Rankings are undoubtedly seductive. But there is a danger that by aggregating different measures, we smooth over important differences in individual areas. We also run the risk that universities – and countries – become so fixated on rankings that they expend their efforts on activities designed to manipulate their place in them instead of concentrating on the quality of higher education as a whole, says Hazelkorn: “The percentage of students who attend these [top-ranking] institutions is a fraction, less than 1%. The real focus should be on whether our systems are world-class.”
